Those in charge of building and maintaining landing pages spend a lot of their time ‘optimizing’ them. The key metric here is the conversion rate: what proportion of visitors who view this page give you their email, start a free trial, or engage with your site in some other way such that you can begin the process of selling them your wares? Dozens of services (e.g., Optimizely) have sprouted to help marketers perform live experimentation (A/B testing) in the hopes of inching that conversion rate higher and higher over time.

In most cases, marketers focus on one or two landing pages for their company — running their experiments, watching how their customers interact with the page, and hoping to drive up conversion rates.

Problem: How to Optimize Conversion Rates for a Website Audit Tool

Our team recently had the opportunity to do something slightly different in a project for MySiteAuditor. MySiteAuditor is a software-as-a-service company that offers digital marketing agencies a website audit tool to embed on their own landing pages.

Here's the idea. A customer of MSA, call them Example Marketing Agency, gets the tool and places it on their website. When visitors go to Example Marketing Agency’s website, they see this tool and use it to perform an SEO audit of their own website. In doing so, the visitor has to send their contact information to Example Marketing Agency. Example Marketing Agency now has a new lead.

In a previous customer research project with MySiteAuditor, we learned that how long a customer stays with MSA depends heavily on the leads their audit tool generates. Customers who see high conversion rates with the audit tool tend to stick around for a long time, but customers with low conversion rates leave and presumably go try some other strategy to generate sales leads.

Our Solution: Improve Your Customer's Customer Retention

This led us to propose an idea to MySiteAuditor. Rather than having each of their customers try to optimize the conversion rate of their own tool (in their silos), why don’t we do some research to figure out what can boost conversion rates for all of MySiteAuditor’s customers (i.e., over ten thousand landing pages)?

We pitched this as a win-win: MySiteAuditor’s customers would be happy because they are generating more leads. In turn, MySiteAuditor is happy because their customers are staying longer. And — bonus win! — we can help MySiteAuditor with a great blog post sharing these results to help attract new customers to the product. MySiteAuditor agreed, and off we went!

Auditing and analyzing

We began by sourcing our data. We couldn’t look at each of the landing pages one-by-one like the typical marketer, so we needed some way of gathering data automatically from each landing page. What we ended up doing was using MySiteAuditor’s own audit engine to analyze the landing pages with the audit tool on them, and using that data (encompassing page features, loading speed, content metrics, etc.) for our analysis.

(For those reading closely, you might notice this is becoming very meta: we're auditing audit-web-pages using our client’s audit-tool in order to help our customer’s customers gain customers.)

We ended up with a dataset containing one record for each landing page. Each record included the conversion rate of the landing page, total traffic to the landing page, and just under 100 features of the landing page, including the number of words/images/links on the page, its loading time, length of its URL, distance of the page from the domain root, and page authority.

Now it was time to break out the machine learning algorithms. Using just these page features — not anything about the company, their marketing strategies, the semantics of the content on the page, etc. — can we predict conversion rates?

We’ve recently been using gradient boosting models (GBMs) in cases like this because, unless you start tailoring their parameters to a given dataset, they generally offer robust, generalizable predictions. Most importantly for our present problem, they tend to be fairly resistant to overfitting. With about 100 features being used as predictors, this is a big plus!
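To make the idea concrete, here is a minimal sketch of gradient boosting for squared error using one-split trees (stumps), written in plain Python. It is not the model from this project: the two features, the six toy records, and the function names (`fit_stump`, `fit_gbm`, `predict_gbm`) are all made up for illustration, and a real analysis would use an established library.

```python
from typing import List, Tuple

# A stump is (feature index, threshold, value if <= threshold, value if > threshold).
Stump = Tuple[int, float, float, float]


def fit_stump(X: List[List[float]], residuals: List[float]) -> Stump:
    """Find the single split that leaves the smallest squared error on the residuals."""
    best, best_sse = None, float("inf")
    for j in range(len(X[0])):
        # Candidate thresholds: every observed value except the largest,
        # so both sides of the split are always non-empty.
        for threshold in sorted({row[j] for row in X})[:-1]:
            left = [r for row, r in zip(X, residuals) if row[j] <= threshold]
            right = [r for row, r in zip(X, residuals) if row[j] > threshold]
            left_mean = sum(left) / len(left)
            right_mean = sum(right) / len(right)
            sse = (sum((r - left_mean) ** 2 for r in left)
                   + sum((r - right_mean) ** 2 for r in right))
            if sse < best_sse:
                best, best_sse = (j, threshold, left_mean, right_mean), sse
    return best


def predict_stump(stump: Stump, row: List[float]) -> float:
    j, threshold, left_value, right_value = stump
    return left_value if row[j] <= threshold else right_value


def fit_gbm(X, y, n_rounds=50, learning_rate=0.1):
    """Start from the mean, then repeatedly fit a stump to the current residuals."""
    base = sum(y) / len(y)
    predictions = [base] * len(y)
    stumps = []
    for _ in range(n_rounds):
        residuals = [target - pred for target, pred in zip(y, predictions)]
        stump = fit_stump(X, residuals)
        stumps.append(stump)
        predictions = [pred + learning_rate * predict_stump(stump, row)
                       for pred, row in zip(predictions, X)]
    return base, learning_rate, stumps


def predict_gbm(model, row):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(predict_stump(s, row) for s in stumps)


def mse(predictions, targets):
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


# Hypothetical toy data: [load time in seconds, word count] -> conversion rate.
X = [[1.0, 300], [2.0, 500], [3.0, 250], [4.0, 800], [5.0, 400], [6.0, 600]]
y = [0.10, 0.09, 0.08, 0.07, 0.06, 0.05]

model = fit_gbm(X, y)
baseline_mse = mse([sum(y) / len(y)] * len(y), y)
model_mse = mse([predict_gbm(model, row) for row in X], y)
print(f"baseline MSE: {baseline_mse:.6f}, boosted MSE: {model_mse:.6f}")
```

The small learning rate is the key design choice here: each stump only nudges the predictions, so no single split dominates the final model.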

GBMs also offer a straightforward way to determine the relative importance of each of the final model’s features: sum up all the error reductions that come from splitting on that feature, and divide that by the total error reduction from splits on any feature (Friedman, 2001).
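The importance calculation described above can be sketched in a few lines of Python. The list of splits and the feature names below are invented for illustration; in practice the per-split error reductions would come from the fitted model.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def relative_importance(splits: List[Tuple[str, float]]) -> Dict[str, float]:
    """Share of the total error reduction attributable to each feature.

    `splits` holds one (feature name, error reduction) pair per split
    across all trees in the model.
    """
    totals: Dict[str, float] = defaultdict(float)
    for feature, error_reduction in splits:
        totals[feature] += error_reduction
    grand_total = sum(totals.values())
    return {feature: total / grand_total for feature, total in totals.items()}


# Hypothetical splits collected from a small model.
splits = [
    ("load_time", 4.0),
    ("word_count", 1.0),
    ("load_time", 2.0),
    ("image_count", 1.0),
]

importance = relative_importance(splits)
for feature, share in sorted(importance.items(), key=lambda item: -item[1]):
    print(f"{feature}: {share:.3f}")
```

Because every share is divided by the same grand total, the importances sum to 1 and can be compared directly across features.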

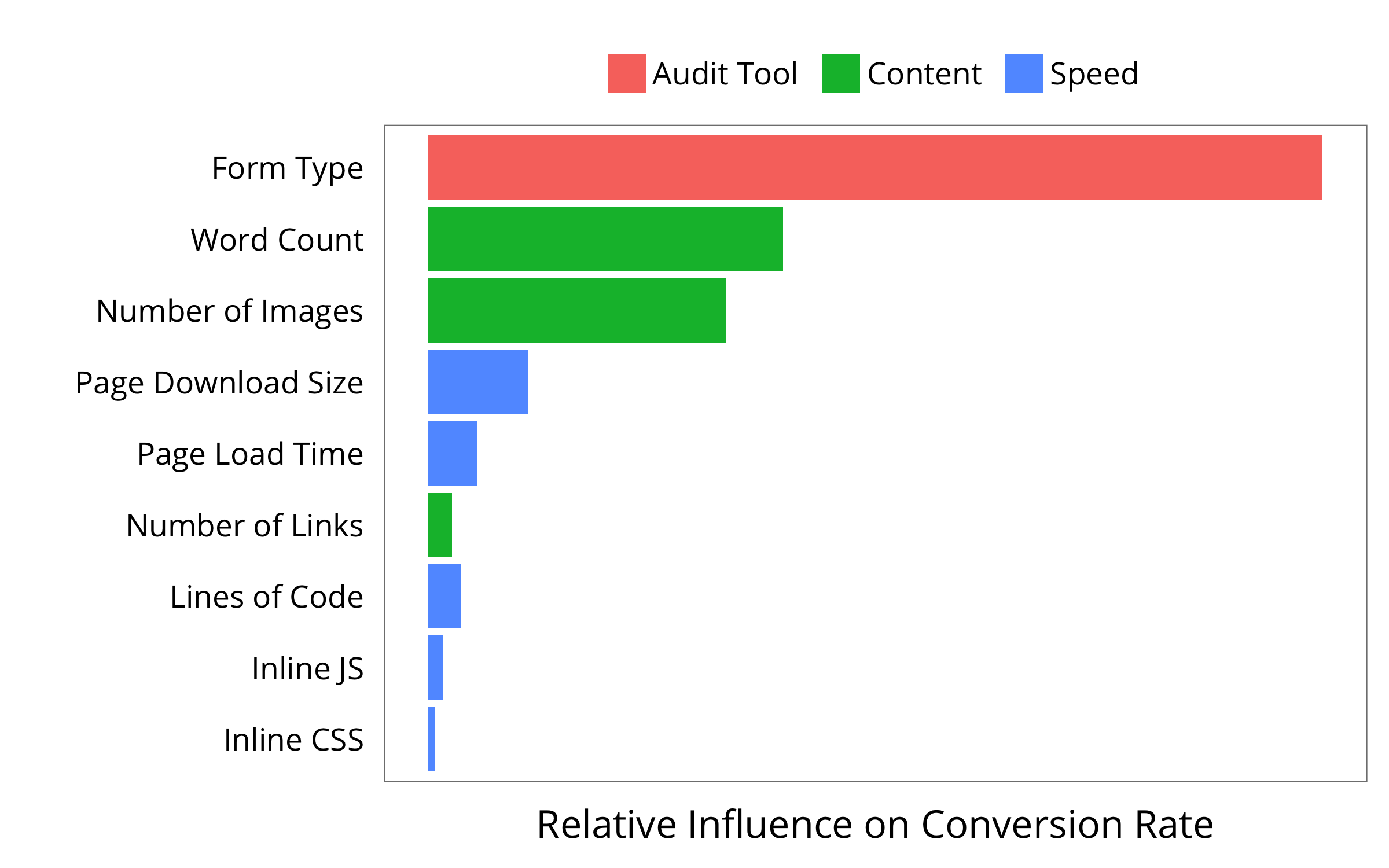

This model yielded 9 features that clustered really neatly into 3 conceptual categories: the kind of audit tool they were using, the content on the page, and the page loading speed. We can plot these categorized features by their relative importance in the model:

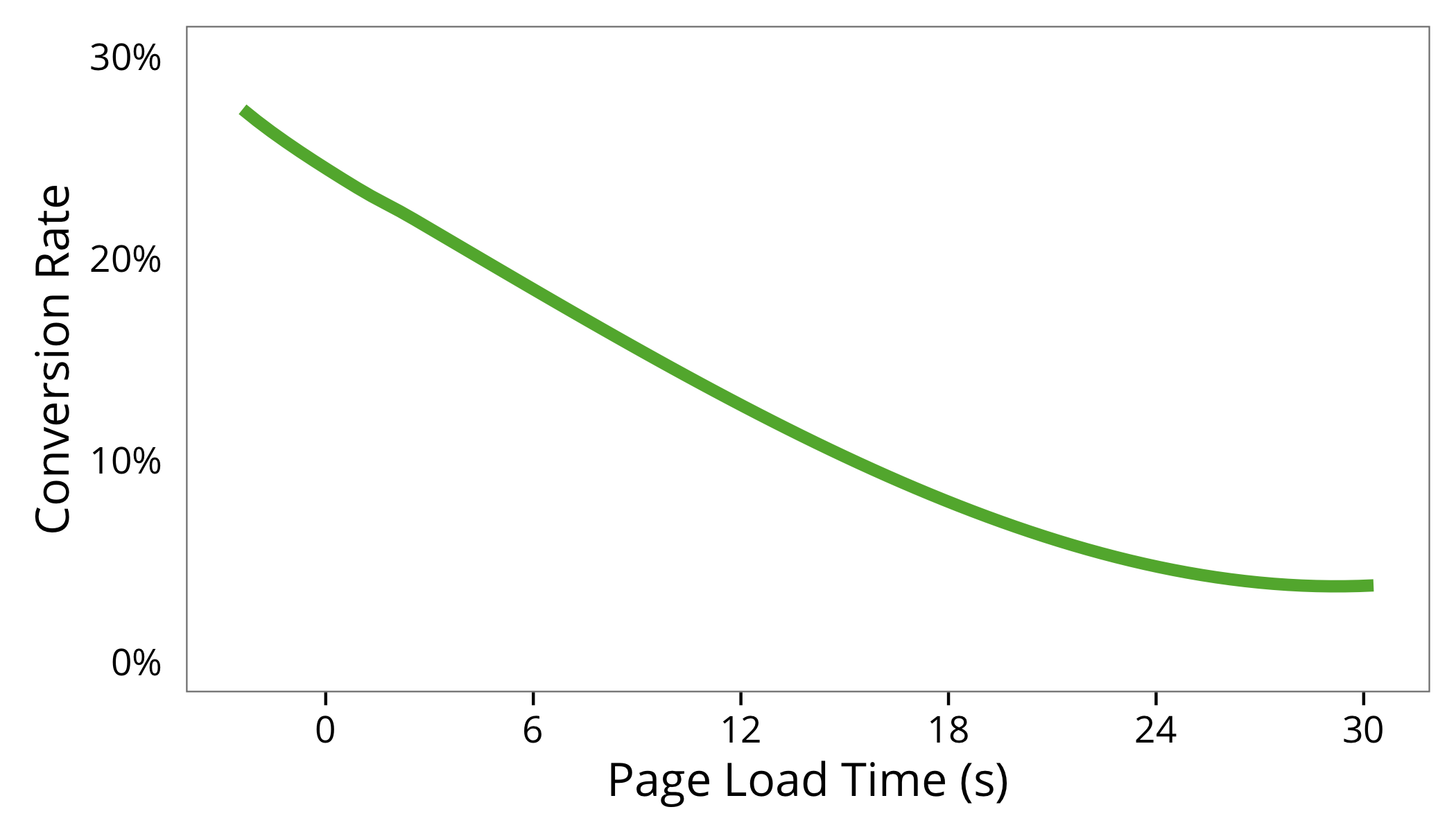

These importance-ratings in GBMs don't actually tell us about the direction of the relation between each feature and conversion rates. But this isn't a huge obstacle for our purposes: many of the relations are intuitive and easily visualized. Take, for instance, the link between page-loading-speed and conversion-rates. Here's that link, visualized:

For every additional second a user had to wait for the page to load, their probability of conversion dropped by 1%! This was a standout finding to us—and something that any digital marketer in charge of landing pages should know.
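Since importance ratings carry no sign, one simple way to check the direction of a relation like this is to fit a straight line and look at its slope. The sketch below does that with ordinary least squares in plain Python. The numbers are invented to resemble the pattern described here; they are not the project's data, and this is not necessarily how the original analysis was done.

```python
from typing import List, Tuple


def least_squares_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Return (slope, intercept) of the ordinary least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical landing pages: load time (seconds) and conversion rate (percent).
load_seconds = [1.0, 2.0, 3.0, 4.0, 5.0]
conversion_pct = [10.2, 8.9, 8.1, 6.8, 6.0]

slope, intercept = least_squares_line(load_seconds, conversion_pct)
print(f"change in conversion rate per extra second: {slope:.2f} percentage points")
```

A negative slope means slower pages convert less often. Like the importance ratings, it describes an association, not a cause.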

We ultimately worked with MySiteAuditor to prepare a blog post about our findings, aimed at digital marketers.

Final Notes

We couldn’t have anticipated cleaner results: not only are these results easy to understand (boiling down to three main components), but the features we identified are all ones that MySiteAuditor’s customers can readily change.

This is good news for two reasons: First, it gives them the chance to act on this information to improve their conversion rates (and stay happy MySiteAuditor customers forever). Second, it will allow us to do some later A/B testing with customers who act on this information. Sure, customers with faster landing pages get more conversions. But is this a causal link, or just correlation? If we take an individual website and speed up its landing page, does this actually boost conversions? A/B tests can help answer this crucial causal question.
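As a rough illustration of how such an A/B test might be evaluated, here is a two-proportion z-test in plain Python. The visitor and conversion counts are hypothetical, and the function name `two_proportion_z_test` is our own; a real experiment would also need a sample size and stopping rule planned in advance.

```python
import math
from typing import Tuple


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> Tuple[float, float]:
    """Compare two conversion rates; return (z statistic, two-sided p-value)."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / standard_error
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical experiment: A is the original page, B is the sped-up page.
z, p_value = two_proportion_z_test(conv_a=100, n_a=2000, conv_b=130, n_b=2000)
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

Because visitors are assigned to the two versions at random, a clear difference here would point to the speed-up itself and not to some other trait of faster sites.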

At the time of this post, things appear promising. The day after MySiteAuditor published the blog post, their customers’ average conversion rate hit an annual high.